The most wonderful thing about YouTube is you can use it to learn just about anything.

One of the 10,000 annoying things about YouTube is that finding a good, satisfying version of the lesson you want to learn can take hours of searching. This is especially true of videos about technical aspects of machine learning. Of course there are one- and two-hour recordings of course lectures by computer science professors. But I’ve been seeking out shorter videos with more animations and illustrations of concepts.

Understanding what a neural network is and how it processes data is necessary for demystifying machine learning. Data goes in, results come out, but in between is a “black box” consisting of code and hardware. It sort of works like a human brain, and yet, it really doesn’t.

So here at last is a painless, math-free video that walks us through a neural network. The particular example shown uses the MNIST dataset, which consists of 70,000 images of handwritten digits, 0–9. So the task being performed is the recognition of those digits. (This kind of system can be used to sort mail using postal codes, for example.)

What you’ll see is how the first layer (a vertical line of circles on the left side) represents the input. If each of the MNIST images is 28 pixels wide by 28 pixels high, then that first layer has to represent 784 pixels and each of their color values — which is a number. (One image is the input — only one at a time.)
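If it helps to see that 28 × 28 = 784 idea in code, here is a minimal sketch in plain Python. The image below is made up (all black with one bright pixel), and I am assuming each pixel is stored as a brightness number between 0.0 and 1.0; the `flatten` helper is just a name I picked for illustration.

```python
# A hypothetical 28x28 image: each pixel is a brightness value,
# assumed here to run from 0.0 (black) to 1.0 (white).
WIDTH, HEIGHT = 28, 28
image = [[0.0 for _ in range(WIDTH)] for _ in range(HEIGHT)]
image[14][14] = 1.0  # one made-up bright pixel near the center


def flatten(img):
    """Unroll the rows of a 2-D image into one long list of input values."""
    return [pixel for row in img for pixel in row]


# One image at a time becomes one input layer.
input_layer = flatten(image)
print(len(input_layer))  # 784
```

Each number in `input_layer` corresponds to one circle in that first vertical layer.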

The final vertical layer, all the way to the right side, is the output of the neural network. In this example, the output tells us which digit was in the input: 0, 1, 2, and so on. To see the value in this, go back to the mail-sorting idea. If a system can read postal codes, it recognizes several numbers and then transmits them to another system that “knows” which postal code goes to which geographical location. My letter gets sorted into the Florida bin and yours into the bin for your home.
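One way to picture reading the output layer is shown in this rough sketch. It assumes there is one output unit per digit (ten in all) and that the unit with the highest value is taken as the network's answer. The values here are invented for illustration, and `predicted_digit` is a hypothetical helper name.

```python
# Made-up values for ten output units, one per digit 0 through 9.
output_layer = [0.02, 0.01, 0.05, 0.10, 0.03, 0.01, 0.02, 0.91, 0.04, 0.08]


def predicted_digit(outputs):
    """Return the position of the largest output value, read as the digit."""
    return max(range(len(outputs)), key=lambda digit: outputs[digit])


print(predicted_digit(output_layer))  # 7
```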

In between the input and the output are the vertical “hidden” layers, and that’s where the real work gets done. In the video you’ll see that the number of circles (often called neurons, though they can also be called simply units) in a hidden layer might well be fewer than the number of units in the input layer. The number of units in the output layer can also differ from the numbers in other layers.

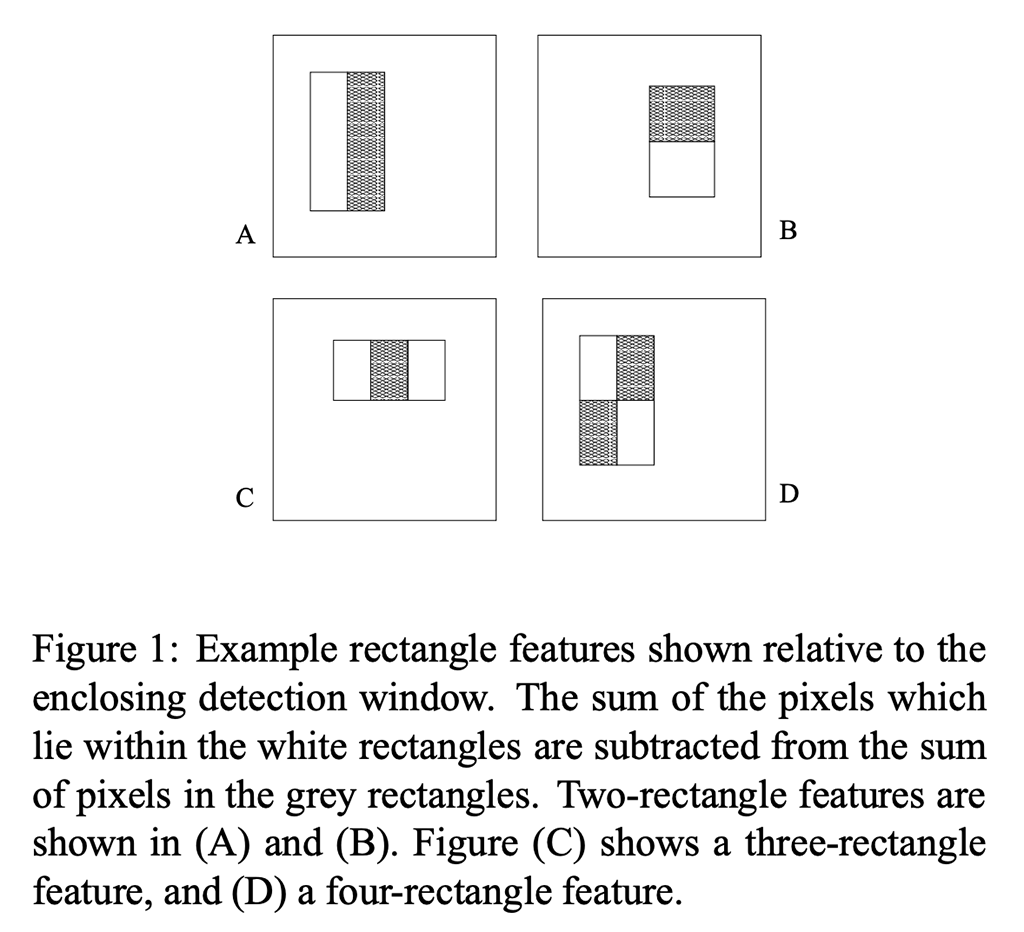

When the video describes edge detection, you might recall an earlier post here.

Beautifully, during an animation, our teacher Grant Sanderson explains and shows that the weights exist not in or on the units (the “neurons”) but in fact in or on the connections between the units.

Okay, I lied a little. There is some math shown here. The weight assigned to each connection is multiplied by the value of the unit on its left side. Those products are summed across all of the left-side units, and that sum is passed to the unit on the right (the one unit that all of those connections feed into).
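That multiply-and-add step is small enough to sketch in a few lines of Python. The three left-side values and their weights are invented for the example (the real first layer has 784 of each per right-side unit), and `weighted_sum` is just an illustrative name.

```python
def weighted_sum(left_values, weights):
    """Multiply each left-side unit's value by its connection weight, then add them all up."""
    return sum(w * a for w, a in zip(weights, left_values))


left_values = [1.0, 0.5, 0.0]   # made-up values of three left-side units
weights = [2.0, -1.0, 0.5]      # made-up weights, one per connection

print(weighted_sum(left_values, weights))  # 1.5
```

Notice that the weights live in their own list, separate from the unit values, which matches the point that weights belong to the connections and not to the units.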

The video bogs down just a bit between the Sigmoid squishification function and applying the bias, but all you really need to grasp is that the value of the right-side unit shows whether or not that little region of the image (in this case, it’s an image) has a significant difference. The math is there to determine if the color, the amount of color, is significant enough to count. And how much it should count.
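For anyone who wants to poke at the sigmoid and the bias directly, here is a hedged sketch using the standard sigmoid formula, 1 / (1 + e^(-x)). The input numbers are made up, and `activation` is a hypothetical helper name, not anything taken from the video.

```python
import math


def sigmoid(x):
    """Squish any number into the range between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def activation(left_values, weights, bias):
    """Weighted sum of the left-side units, plus a bias, passed through the sigmoid."""
    z = sum(w * a for w, a in zip(weights, left_values)) + bias
    return sigmoid(z)


left_values = [1.0, 0.5, 0.0]   # made-up unit values
weights = [2.0, -1.0, 0.5]      # made-up connection weights (weighted sum is 1.5)

print(activation(left_values, weights, bias=-1.5))  # 0.5
print(activation(left_values, weights, bias=-5.0))  # much closer to 0
```

The bias shifts how large the weighted sum has to be before the right-side unit lights up: with a more negative bias, the same inputs produce a value nearer to 0.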

I know — math, right?

But seriously, watch the video. It’s excellent.

“And that’s a lot to think about! With this hidden layer of 16 neurons, that’s a total of 784 times 16 weights, along with 16 biases. And all of that is just the connections from the first layer to the second.”

—Grant Sanderson, But what is a neural network? (video)
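The arithmetic in that quote is easy to check. This short sketch counts only the connections from the input layer to the first hidden layer, using the 784 and 16 from the quote; the variable names are my own.

```python
input_units = 28 * 28    # 784 pixels in one MNIST image
hidden_units = 16        # units in the first hidden layer, per the quote

weights = input_units * hidden_units  # one weight per connection
biases = hidden_units                 # one bias per hidden unit

print(weights, biases, weights + biases)  # 12544 16 12560
```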

Sanderson doesn’t burden us with the details of the additional layers. Once you’ve seen the animations for that first step — from the input layer through the connections to the first hidden layer — you’ll have a real appreciation for what’s happening under the hood in a neural network.

In the final 6 minutes of this 19-minute video, you’ll also learn how the “learning” takes place in machine learning when a neural net is involved. All those weights and bias values? They are not determined by humans.

“Digging into what the weights and biases are doing is a good way to challenge your assumptions and really expose the full space of possible solutions.”

—Grant Sanderson, But what is a neural network? (video)

I confess it does get rather mathy at the end, but hang on through the parts that are beyond your personal math background and listen to what Sanderson is telling us. You can get a lot out of it even if the equation itself is like hieroglyphics to you.

The video content ends at 16:26, followed by the usual “subscribe to my channel” message. More info about Sanderson and his excellent videos is on his website, 3Blue1Brown.

AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.
