I finished reading this book back in April, and I’d like to revisit it before I read a couple of new books I just got. This was published in 2018, but that’s no detriment. The author, Hannah Fry, is a “mathematician, science presenter and all-round badass,” according to her website. She’s also a professor at University College London. Her bio at UCL says: “She was trained as a mathematician with a first degree in mathematics and theoretical physics, followed by a PhD in fluid dynamics.”

The complete title, Hello World: Being Human in the Age of Algorithms, doesn’t make it sound like a book about artificial intelligence. But from the very first pages she writes about control and “the boundary between controller and controlled,” and this reflects the link between “just” talking about algorithms and talking about AI. Software is made of algorithms, and AI is made of software, so there we go.

In just over 200 pages and seven chapters, simply titled Power, Data, Justice, Medicine, Cars, Crime, and Art, Fry lays out the primary areas of concern for the question “Are we in control?” and provides examples in each area.

Power. I felt disappointed when I saw that this chapter starts with Deep Blue beating world chess champion Garry Kasparov in 1997, but my spirits soon lifted when I saw how she uses this example to show that the way we perceive a computer system affects how we interact with it (shades of Sherry Turkle and Reeves & Nass). She briefly discusses machine learning and image recognition here. She talks about people trusting GPS map directions and search engines. She explains a 2012 ACLU lawsuit involving Medicaid assistance, bad code, and unwarranted trust in code. Intuition tells us when something seems “off,” and that’s a critical difference between us and the machines.

Algorithms “are what makes computer science an actual science.”

—Hannah Fry, p. 8

Data. Sensibly, this chapter begins with Facebook and the devil’s bargain most of us have made in giving away our personal information. Fry talks about the first customer loyalty cards at supermarkets and retells the story of Target and the pregnant teenager. In explaining how data brokers operate, Fry describes how companies buy access to you via your interests and your past behaviors (not only online). She summarizes a 2017 DEF CON presentation that showed how easily supposedly anonymous browsing data can be tied to real names, as well as the dastardly Cambridge Analytica exploit. I especially liked how she explains, based on research, how small the effects of newsfeed manipulation are likely to be, and then adds that a small margin might be enough to win an election. This chapter wraps up with China’s citizen rating system (Black Mirror in reality) and the toothlessness of GDPR.

Justice. First up is inequality in criminal sentencing, illustrated with two U.K. examples. Fry then surveys studies in which multiple judges ruled on the same hypothetical cases, and inconsistencies abounded. Then come the issues with sentencing guidelines (and why judges need to be able to exercise discretion). So we arrive at calculating the probability that a person will “re-offend”: the risk assessment. Fry includes a nice, simple decision-tree graphic here. She neatly explains the idea of combining many decision trees into an ensemble that averages the results of all the trees (the random forest algorithm is one example). Next come more examples from research, the COMPAS product, and the 2016 ProPublica investigation. This leads to a really nice discussion of bias (pp. 65–71 in the U.S. paperback edition).
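To make the ensemble idea concrete, here is a minimal sketch in Python of the averaging step only. The three “trees” are hand-written, hypothetical rules, and the features (age, prior offences, employment) and leaf values are made up for illustration; they are not Fry’s graphic, COMPAS, or any real risk tool. A real random forest learns its trees from data, training each one on a random resample of the cases and a random subset of the questions.

```python
from statistics import mean


# Hypothetical, hand-written decision trees. Each one asks a few yes/no
# questions and ends at a leaf holding an estimated probability of re-offending.
def tree_a(person):
    if person["prior_offences"] > 2:
        return 0.7 if person["age"] < 25 else 0.5
    return 0.2


def tree_b(person):
    if person["age"] < 30:
        return 0.6 if not person["employed"] else 0.3
    return 0.25


def tree_c(person):
    if person["employed"]:
        return 0.2
    return 0.55 if person["prior_offences"] > 0 else 0.35


def ensemble_risk(person, trees=(tree_a, tree_b, tree_c)):
    """Average the individual trees' estimates into one ensemble score."""
    return mean(tree(person) for tree in trees)


high = {"age": 22, "prior_offences": 3, "employed": False}
low = {"age": 45, "prior_offences": 0, "employed": True}
print(ensemble_risk(high), ensemble_risk(low))
```

Averaging smooths out the quirks of any single tree: one tree that asks an odd question gets outvoted by the others.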

Medicine. Although image recognition was mentioned very briefly earlier, here Fry gets more deeply into the topic, starting with the idea of pattern recognition and the question of what pattern, exactly, is being recognized. Humans don’t classify biopsy slides or detect anomalies in them perfectly, so this is one of the promising frontiers for machine learning. Fry describes neural networks here. She gets into specifics about a system trained to detect breast cancer. But image recognition is not necessarily the killer app for medical diagnosis. Fry describes a study of 678 nuns (which I’d never heard of before) in which researchers found that essays the nuns had written before taking their vows could be used to predict which of them would develop dementia later in life. The broader idea is that analyzing more kinds of data about women (not only their mammograms) could yield a better predictor of malignancy.

“Even when our detailed medical histories are stored in a single place (which they often aren’t), the data itself can take so many forms that it’s virtually impossible to connect … in a way that’s useful to an algorithm.”

—Hannah Fry, p. 103

The Medicine chapter also mentions IBM Watson; challenges with labeling data; diabetic retinopathy; the lack of coordination among hospitals, doctors’ offices, and so on, which leads to missed clues; and the privacy of medical records. Fry zeroes in on DNA data in particular, noting that all those “find your ancestors” companies now have a goldmine of data to work with. Fry ends with a caution about profit: whatever medical systems are developed in the future, there will always be people who stand to gain and others who stand to lose.

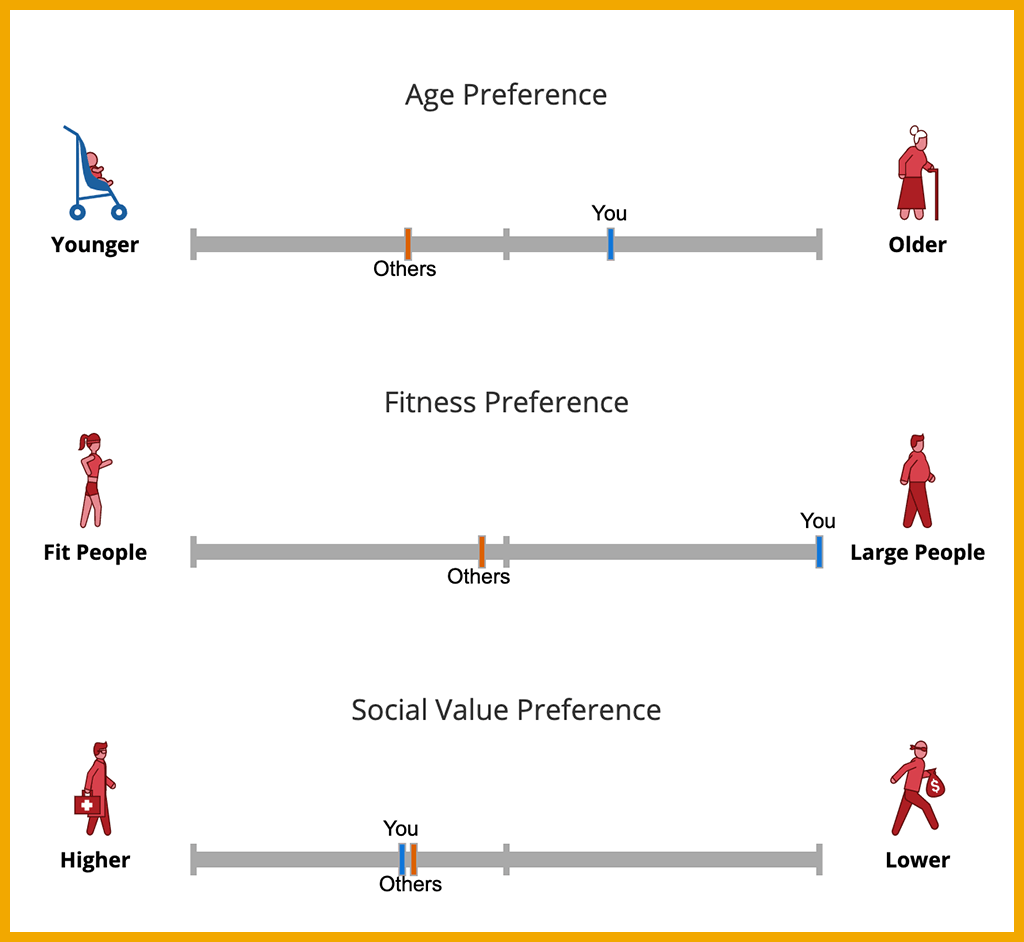

Cars. I’m a little burnt out on the topic of self-driving cars, having already read a lot about them. I liked that Fry starts with DARPA and the U.S. military’s longstanding interest in autonomous vehicles. I can’t agree with her that “the future of transportation is driverless” (p. 115). After discussing LiDAR, the flaws of GPS, and the conflicting signals that different systems in one car can produce, Fry takes a moment to explain Bayes’ theorem, saying it “offers a systematic way to update your belief in a hypothesis on the basis of evidence,” and giving a nice real-world example of probabilistic inference. And of course, the trolley problem. She brings up something I don’t recall seeing before: Humans are going to prank autonomous vehicles. That opens a whole ‘nother box of trouble. Her anecdote under the heading “The company baby” leads to a warning: Relying on autopilot all the time can have unintended consequences when the time comes to fly manually.
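As a rough illustration of “updating your belief on the basis of evidence,” here is a small Python sketch of a single Bayes’ theorem update. The scenario and every number in it are my own hypothetical assumptions, not Fry’s example: a car starts out 10 percent sure there is an obstacle ahead, and its sensor fires 90 percent of the time when an obstacle is really there but also gives a false alarm 20 percent of the time when it isn’t.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) using Bayes' theorem.

    prior               -- belief in the hypothesis before the evidence
    p_evidence_if_true  -- chance of seeing this evidence if the hypothesis is true
    p_evidence_if_false -- chance of seeing it anyway if the hypothesis is false
    """
    numerator = p_evidence_if_true * prior
    total_evidence = numerator + p_evidence_if_false * (1 - prior)
    return numerator / total_evidence


# Hypothetical numbers: 10% prior, sensor hit rate 90%, false-alarm rate 20%.
belief = bayes_update(0.1, 0.9, 0.2)      # one sensor reading: 1/3
belief = bayes_update(belief, 0.9, 0.2)   # a second reading: 9/13, about 0.69
print(belief)
```

One reading only raises the car’s belief to about 33 percent, because false alarms are common; a second independent reading pushes it to about 69 percent. Each posterior becomes the prior for the next piece of evidence.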

Crime. This chapter begins with a compelling anecdote, followed by a neat historical case from France in the 1820s, and then turns to predictive policing and all its woes. I hadn’t read about the balance between the buffer zone and distance decay in tracking serial criminals, an approach called geoprofiling, so that was interesting. I also didn’t know about Jack Maple, a New York City police officer, and his “Charts of the Future” depicting stations of the city’s subway system, which evolved into a data tool named CompStat. I enjoyed learning what burglaries and earthquakes have in common. And then there’s PredPol. There have been thousands of articles about it since its debut in 2011, as Fry points out. Her summary of the issues related to how police use predictive policing data is quite good: compact and clear. PredPol is one specific product, and not the only one. It is, Fry says, “a proprietary algorithm, so the code isn’t available to the public and no one knows exactly how it works” (p. 157).
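The buffer-zone-versus-distance-decay balance can be sketched in a few lines of Python. This is a minimal sketch loosely modelled on Kim Rossmo’s criminal geographic targeting formula, as I understand it, and not necessarily the exact version Fry presents. The grid, the crime locations, the buffer size, and the exponents `f` and `g` are all illustrative assumptions, not calibrated values. Distances are measured in city blocks (Manhattan distance).

```python
def manhattan(a, b):
    """City-block distance between two (x, y) grid points."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def geoprofile_score(cell, crime_sites, buffer=2.0, f=1.2, g=1.2):
    """Score how likely `cell` is to be the offender's home base.

    Outside the buffer zone, each crime contributes less the farther away
    it is (distance decay). Inside the buffer zone, the contribution shrinks
    as the cell gets closer to the crime, because offenders tend not to
    strike right next to home. The two pieces meet at the buffer's edge.
    """
    score = 0.0
    for site in crime_sites:
        d = manhattan(cell, site)
        if d > buffer:
            score += 1.0 / d ** f
        else:
            score += buffer ** (g - f) / (2 * buffer - d) ** g
    return score


def most_likely_bases(crime_sites, width, height, top=3, **params):
    """Rank every cell of a width x height grid by geoprofile score."""
    scores = {
        (x, y): geoprofile_score((x, y), crime_sites, **params)
        for x in range(width)
        for y in range(height)
    }
    return sorted(scores, key=scores.get, reverse=True)[:top]


# Hypothetical crime locations on an 11 x 11 grid of city blocks.
crimes = [(2, 8), (8, 8), (5, 2)]
print(most_likely_bases(crimes, 11, 11))
```

The output is a ranked list of places to search first, not the offender’s address; like PredPol, it narrows the odds rather than telling the future.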

“The [PredPol] algorithm can’t actually tell the future. … It can only predict the risk of future events, not the events themselves — and that’s a subtle but important difference.”

—Hannah Fry, p. 153

Face recognition is covered in the Crime chapter, which makes perfect sense. Fry describes a case in which a white man was arrested after being wrongly identified from CCTV footage of a bank robbery. Being arrested by police can lead to injury or even death, as we all know, not to mention the legal expenses of trying to clear your name after an erroneous arrest. Even though accuracy rates are rising, the chance of being matched to a face that isn’t yours remains worrying.
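Why does a small error rate still worry me? A quick back-of-the-envelope calculation in Python shows how false matches pile up when a face is compared against many others. The false-match rate of 1 in 10,000 is a hypothetical figure, not one from the book, and the sketch assumes every comparison is independent, which real systems won’t quite satisfy.

```python
def chance_of_false_match(false_match_rate, comparisons):
    """Probability of at least one false match across many comparisons,
    assuming each comparison independently has the same false-match rate."""
    return 1 - (1 - false_match_rate) ** comparisons


rate = 1 / 10_000  # hypothetical: 1 false match per 10,000 comparisons
print(chance_of_false_match(rate, 1_000))    # about 0.095
print(chance_of_false_match(rate, 100_000))  # above 0.9999
```

Searching a face against 1,000 others already gives roughly a 1-in-10 chance of at least one wrong match; against 100,000 faces, a wrong match is all but certain.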

“How do you decide on that trade-off between privacy and protection, fairness and safety?”

—Hannah Fry, p. 172

Art. Here we have “a famous experiment” I’d never heard of: Music Lab, in which thousands of music fans logged into a music player app, listened to songs, rated them, and chose what to download (back when we downloaded music). The results showed that for all but the very best and very worst songs, other people’s ratings had a huge influence on what was downloaded in different segments of the app. A song that became a massive hit in one “world” was dead and buried in another. This leads us to recommendation engines such as those used by Netflix and Amazon. Attempts to predict how well movies would do at the box office turned out to be badly unreliable. The trouble is the lack of an objective measure of quality: it’s not “This is cancer/This is not cancer.” Beauty is in the eye of the beholder and all that. A recommendation engine is different because it doesn’t use a quality score; it matches similarity. You liked these 10 movies; I like eight of those; chances are I might like the other two.
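That “you liked 10, I liked eight of them” logic can be sketched as a tiny user-based recommender in Python. This is one common textbook approach (collaborative filtering with Jaccard similarity), chosen here for illustration; it is not how Netflix or Amazon actually build their systems, and the film names and the second user, “sam,” are hypothetical.

```python
def jaccard(a, b):
    """Share of liked items two people have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(my_likes, others_likes, top=2):
    """Suggest items I haven't liked yet, weighting each suggestion by how
    similar the people who liked it are to me."""
    scores = {}
    for their_likes in others_likes.values():
        similarity = jaccard(my_likes, their_likes)
        for item in their_likes - my_likes:
            scores[item] = scores.get(item, 0.0) + similarity
    # Highest score first; ties broken alphabetically so the output is stable.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top]


your_likes = {f"film_{n:02d}" for n in range(1, 11)}  # film_01 ... film_10
my_likes = {f"film_{n:02d}" for n in range(1, 9)}     # film_01 ... film_08
others = {"you": your_likes, "sam": {"film_01", "film_11"}}
print(recommend(my_likes, others))  # ['film_09', 'film_10']
```

Notice that nothing here measures whether film_09 is any good. The recommendation rests entirely on overlap (8 shared likes out of 10 gives a similarity of 0.8), which is exactly the sidestep around quality that Fry describes.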

Fry goes on to discuss programs that create original (or seemingly original) works of art. A system may produce a new musical or visual composition, but it doesn’t come from any emotional basis. It doesn’t indicate a desire to communicate with others, to touch them in any way.

In her Conclusion, Fry returns to the questions about bias, fairness, mistaken identity, and privacy, and to the control we give up when we trust algorithms. People aren’t perfect, and neither are algorithms. Taking the human consequences of machine errors into account at every stage is a step toward accountability. Building in the ability to trace back and explain decisions, predictions, and outputs is a step toward transparency.

For details about categories of algorithms based on tasks they perform (prioritization, classification, association, filtering; rule-based vs. machine learning), see the Power chapter (pp. 8–13 in the U.S. paperback edition).


AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.
