I recently found out that Kaggle has a set of free courses for learning AI skills.

The first course is an introduction to Python, and these are the course modules:

- Hello, Python: A quick introduction to Python syntax, variable assignment, and numbers

- Functions and Getting Help: Calling functions and defining our own, and using Python’s builtin documentation

- Booleans and Conditionals: Using booleans for branching logic

- Lists: Lists and the things you can do with them. Includes indexing, slicing and mutating

- Loops and List Comprehensions: For and while loops, and a much-loved Python feature: list comprehensions

- Strings and Dictionaries: Working with strings and dictionaries, two fundamental Python data types

- Working with External Libraries: Imports, operator overloading, and survival tips for venturing into the world of external libraries

Even though I’m an intermediate Python coder, I skimmed all the materials and completed the seven problem sets to see how they are teaching Python. The problems were challenging but reasonable, but the module on functions is not going to suffice for anyone who has little prior experience with programming languages. I see this in a lot of so-called introductory materials — functions are glossed over with some ready-made examples, and then learners have no clue how returns work, or arguments, etc.

At the end of the course, the learner is encouraged to join a Kaggle competition using the Titanic passengers dataset. However, the learner is hardly prepared to analyze the Titanic data at this point, so really this is just an introduction to how to use files provided in a competition, name your notebook, save your work, and submit multiple attempts. The tutorial gives you all the code to run a basic model with the data, so it’s really more a demo than a tutorial.

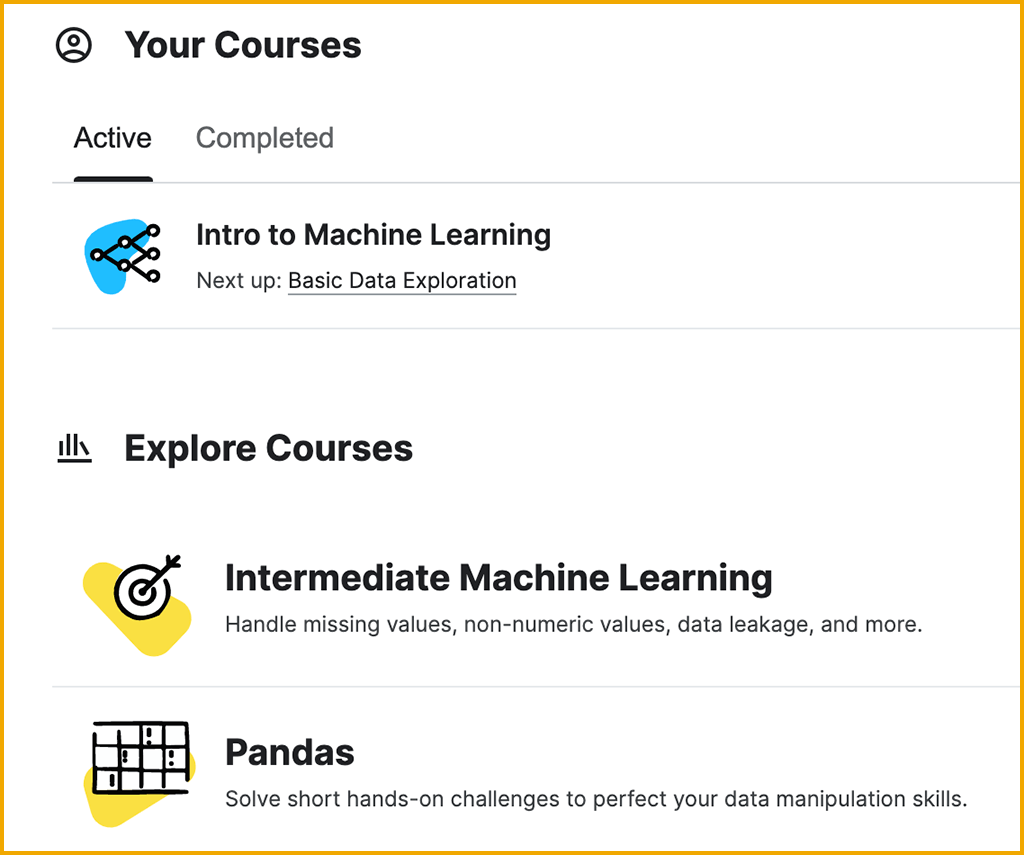

My main interest is in the machine learning course, which I’ll begin looking at today.

.

AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.

.