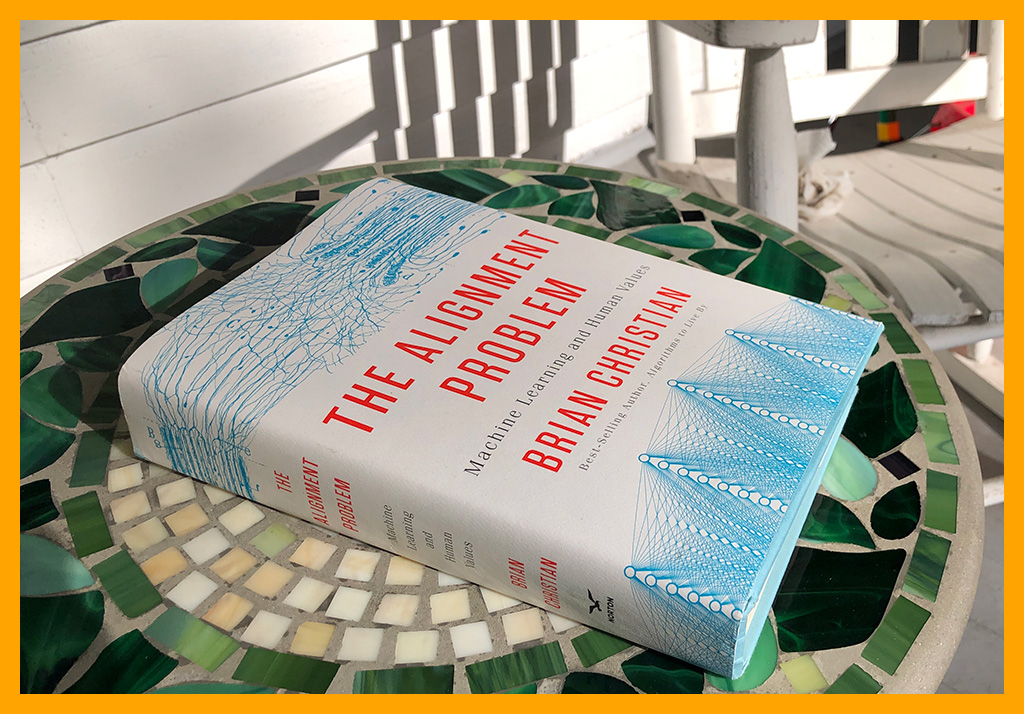

I’m not sure why I put aside the book The Alignment Problem: Machine Learning and Human Values, by Brian Christian (2020), with only two chapters left unread. Probably my work obligations piled up, and it languished for a few months on the “not finished” book stack. It certainly was not due to any fault in the book itself — it’s an excellent study of aspects of AI that are not commonly discussed in the general press. These issues are not obscure or unimportant (quite the opposite), and Christian’s style of storytelling is well suited to explaining them clearly. With my summer waning away, I finally went back and finished reading.

I read and enjoyed his earlier book Algorithms to Live By (co-authored with Tom Griffiths). That book also incorporated stories and anecdotes gleaned through one-on-one interviews. I’m impressed by the immense amount of time and effort that must have gone into this book — apart from all the reading and research that any proper nonfiction book requires, here the author also needed to attend numerous computer science and AI conferences, as well as schedule and complete interviews with dozens of researchers and other experts.

The result is a fascinating exploration of various facets of “the alignment problem,” which is the challenge of ensuring that AI systems are doing what we want them to do, doing what we think they are doing (which isn’t always easy to know), and doing things for the right reasons (that is, mirroring human values rather than, say, turning into HAL from 2001: A Space Odyssey).

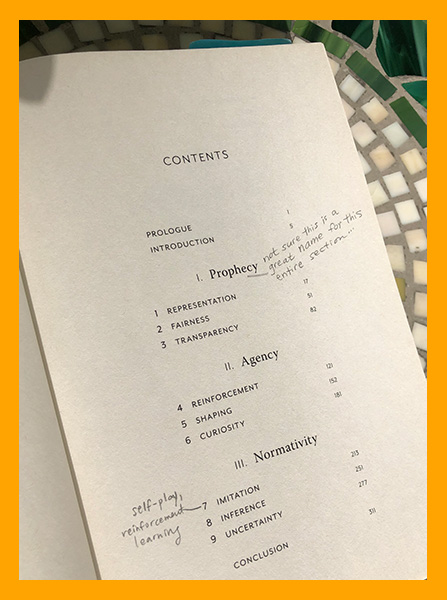

The book has three sections, titled Prophecy, Agency, and Normativity, and each section has three chapters. (I don’t like “prophecy” as a stand-in for “prediction” or “probability,” but that’s just me.) The Prophecy section covered the ground most familiar to me, and yet it was still interesting to read.

Predictions

1. Representation. We begin with Frank Rosenblatt and the perceptron, and how the promise was effectively sabotaged by Minsky and Papert (1969). Straight from there to AlexNet and how it crushed the ImageNet Challenge in 2012. Next, Google Photos labeling a photo of Black people as “gorillas” (2015–18). An excellent history of how the technology of photography misrepresents Black people. Bias, training data, and the research of Joy Buolamwini. From images we move on to language models/word embeddings, still exploring bias. New to me was the significance of reaction time in word-association tests on human subjects — and how “the distance between embeddings in word2vec … uncannily mirrors the human reaction-time data” (p. 45). By the end of this chapter we understand how biases become part of models derived from machine learning, and thus how some representations are grossly inaccurate.

2. Fairness. This chapter focuses largely on the COMPAS product (used in bail, parole, and sentencing decisions in the U.S. justice system), but it begins with the classification of parolees in Illinois in 1927. We see how statistical models were used to make decisions about people in the prison system long before any application of machine learning was possible. What emerges from the discussion of the 2016 ProPublica investigation of COMPAS is that when the base rates for two groups are different (here, the base rate of recidivism for offenders who are white and those who are Black), the risk estimates will skew according to that difference. That means the group with higher recidivism, historically, will be predicted to have a higher risk of recidivism now and in the future, and more of its members who would not reoffend will be wrongly flagged as high risk. Not fair, right? But if you adjust the system to even out those errors, you’re bound to make it unfair in other ways. The COMPAS algorithm is “fair” in the sense that a given risk score means roughly the same likelihood of recidivism no matter which group a person belongs to.
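The base-rate tension can be made concrete with a little arithmetic. The sketch below is a toy illustration, not the COMPAS model: the function name, the two score levels, and the group shares are all invented for the example. It assumes a score that means exactly the same thing in both groups (20 percent of low scorers and 80 percent of high scorers are re-arrested) and then checks how often people who were not re-arrested had been flagged as high risk.

```python
def error_rates(share_high, p_low=0.2, p_high=0.8):
    """Return (base_rate, false_positive_rate, false_negative_rate) for one group.

    share_high: fraction of the group that receives the high score (hypothetical).
    p_low, p_high: re-arrest probability among low- and high-scored people.
    These are the same for every group, so the score is calibrated by construction.
    """
    share_low = 1.0 - share_high
    rearrested_high = share_high * p_high           # flagged, later re-arrested
    rearrested_low = share_low * p_low              # not flagged, later re-arrested
    not_rearrested_high = share_high * (1 - p_high)  # flagged, not re-arrested
    not_rearrested_low = share_low * (1 - p_low)     # not flagged, not re-arrested

    base_rate = rearrested_high + rearrested_low
    false_positive_rate = not_rearrested_high / (not_rearrested_high + not_rearrested_low)
    false_negative_rate = rearrested_low / base_rate
    return base_rate, false_positive_rate, false_negative_rate


# Two hypothetical groups that differ only in how many members get the high score.
group_a = error_rates(share_high=0.5)
group_b = error_rates(share_high=0.2)
```

With these made-up numbers, group A has a base rate of 0.5 and group B of 0.32. The score is equally accurate for both, yet the false-positive rate is 0.2 for group A and about 0.06 for group B, while the false-negative rate runs the other way (0.2 versus 0.5). Equal calibration and equal error rates cannot both hold once the base rates differ.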

One segment in the chapter looks at data privacy and how removing attributes like race, age, and gender doesn’t actually protect the individual — because those and other attributes can still be derived from other variables (in our online behavior, for example). The term for this: redundant encodings. Summary quote: “Fairness through blindness doesn’t work” (p. 65). Christian notes how fairness, accountability, and transparency went from conference rejections in 2013 to a central research area in machine learning/AI by 2016. The result of all this is closer scrutiny of predictive models that affect people’s lives — scrutiny of what’s really being predicted, and also exactly what data the predictions are based on. In the case of COMPAS, the base rates are really for who gets re-arrested (who gets caught) rather than literally who commits new crimes.

3. Transparency. Beginning with an example from the practice of medicine, this chapter deals with the ability to see why an AI system is doing what it does. First Christian describes a rule-based decision system (good old-fashioned AI; that is, no machine learning involved): if the patient has these symptoms/prior conditions, do x. Then he describes a neural net that was trained on hospital data to recommend which pneumonia patients to admit for care. A researcher noted that the neural net had apparently learned a rule that said people with asthma should be sent home, not admitted. This illustrates how unexpected the pitfalls of training can be — in the past data, people with asthma had a high recovery rate from pneumonia, but that is precisely because they were admitted and received care, not because they were naturally more likely to recover.

The European Union’s GDPR law is discussed. It says EU citizens have the right to know why an algorithmic decision was made — if they were denied a bank loan, for example. This puts a burden on corporations and technology firms that they can’t always bear, because many machine learning systems don’t include any option to examine the components of a recommendation or prediction. It raises the question: If we can’t find out why the system made that recommendation, should we be using that system?

There’s a neat segment comparing human decision-making with decisions from machine algorithms: when using the same data, such as school test scores and class rank, the humans rely heavily on the data but are inconsistent in their recommendations. Research has shown again and again that when humans and machines base decisions on the same data (“codable input variables”), the human decisions are never superior to the machine’s, even in medicine (p. 93). A conclusion is that human experts know which features to look for (to make an assessment) but not how to “do the math.” (We’re too dependent on heuristics.) I was interested to learn that in at least one case, a model showed that data on patients’ medical histories yielded better predictions than data about their current symptoms (p. 101) — this was in a segment about selecting only relevant features and building a simple model, instead of a complex model using all of the possible data. Easier to have transparency in a simple model. However, not all problems allow for simple models.

Having the system generate more outputs is one way to increase transparency. For the pneumonia admission example, the predictions might include the likely cost of treatment and length of hospital stay, not only the likelihood of survival. Another technique for greater transparency is “deconvolution,” which allows researchers to view a visualization of what complex convolutional neural nets for image recognition are “paying attention to” in each of the hidden layers of the net. This can enable researchers to strip out certain layers that appear not to be adding much to the process. At the end of this chapter, Christian explores the idea of interpretability and using a separate computer system to extract the concepts (in a sense) that another system is relying on in making decisions (p. 115; see also this paper). The example given is stripes on a zebra: how important are the stripes to the system’s prediction that an image shows a zebra? On which layer does it account for the stripe pattern?

Agency

Now we move on to the second section of the book, Agency.

4. Reinforcement. Beginning in work with animals and moving on to young humans (Skinnerism), reinforcement learning has a longer history than AI. I liked that Arthur Samuel’s checkers-playing program from the 1950s appears early in this chapter. Cybernetics, feedback, and entropy make an early appearance too. Soon we come to a U.S. Air Force–funded project and nearly 50 years of work by Andrew Barto and Richard Sutton. Mazes, games, scores, points, and the “reward hypothesis.” Christian acknowledges right away that not all decisions in real life have rewards. The connectedness of our choices, the way they change the state of play, the fact that it’s often impossible to know if the best choice was made at any juncture, but many non-optimal choices might still lead to the desired goal in the end. (So much messier than supervised learning with labeled data!) Two parts of the problem: the policy (what to do, when to do it) and the value function (how much reward or punishment to expect from a given situation). Choosing an action means estimating the chances that it will lead to desired outcomes. Feedback along the way is necessary: if the system waited for one final payoff, such as winning the game at the end, it would have a hard time learning to make good choices along the way, so it updates each estimate from the next one (“learning a guess from a guess,” p. 140). Sutton and Barto called this temporal-difference (TD) learning: their algorithm would adjust the value function for future actions based on the result of each new action. Q-learning grew out of this line of work, and the approach was demonstrated in a backgammon program in the early 1990s that was “entirely ‘self-taught’” (p. 141) through self-play.
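To see what “learning a guess from a guess” can look like, here is a minimal sketch of a tabular TD(0) update. It is my own toy setup, not an example from the book: the agent walks a short corridor from left to right, and the only reward (1.0) arrives on the final step. The function name, corridor length, learning rate `alpha`, and discount `gamma` are all assumptions chosen for illustration.

```python
def td0_corridor(n_states=5, episodes=200, alpha=0.5, gamma=0.9):
    """Estimate state values in a one-way corridor with tabular TD(0)."""
    # One extra slot for the terminal state, whose value stays at 0.
    values = [0.0] * (n_states + 1)
    for _ in range(episodes):
        for state in range(n_states):
            next_state = state + 1
            # The only reward is for the step that reaches the end.
            reward = 1.0 if next_state == n_states else 0.0
            # TD error: how much better or worse things look after one step.
            td_error = reward + gamma * values[next_state] - values[state]
            # Nudge the current guess toward the next guess.
            values[state] += alpha * td_error
    return values[:n_states]


values = td0_corridor()
```

After enough passes, the value of the last state settles near 1.0 and each earlier state is worth `gamma` times the one after it, so the first state ends up near 0.9 to the fourth power (about 0.66). No state but the last ever sees a reward directly; the estimates in between carry the signal backward.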

A segment on dopamine (in brains): fewer than 1 percent of our neurons can produce dopamine, but those neurons are connected to millions of others. Release of dopamine is pleasure! There was a mystery in early research: monkeys trained with a light or a bell to expect food would eventually experience a dopamine release at the cue and none at receiving the food itself. TD theory eventually unlocked the mystery: dopamine comes not from the reward itself but from the expectation of the reward. Christian describes this as a fluctuation in the value function: “suddenly the world seemed more promising than it had a moment ago” (p. 143; italics in original). The temporal-difference error arises when, for example, there is no food for the monkey — there is no reward (or a much smaller reward) where one was expected.
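The dopamine pattern lines up with the TD error if we write out a single trial. This is a simplified sketch under my own assumptions: four hypothetical states (before the cue, cue, waiting, trial over), no discounting, and values that have already been learned, with the cue arriving unpredictably so the pre-cue state is worth nothing.

```python
def td_errors(values, rewards, gamma=1.0):
    """TD error at each step: reward + gamma * V(next) - V(current)."""
    return [
        reward + gamma * v_next - v_now
        for reward, v_now, v_next in zip(rewards, values[:-1], values[1:])
    ]


# Assumed learned values for: before cue, cue, waiting, trial over.
# Once the cue appears, food (reward 1.0) is fully expected.
learned_values = [0.0, 1.0, 1.0, 0.0]

food_delivered = td_errors(learned_values, rewards=[0.0, 0.0, 1.0])
food_omitted = td_errors(learned_values, rewards=[0.0, 0.0, 0.0])
```

In both trials the error is +1 at the moment the cue appears (the world suddenly looks more promising). When the food arrives as expected, the error at that step is 0; when it is withheld, the error is -1.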

Christian goes on to say, “The effect on neuroscience has been transformative” (p. 145); TD theory is now applied in some studies of brain function. After a bit more about neuroscience and measuring (human) happiness, he closes the chapter with the question of how to structure rewards to get the results we want from an algorithmic system (dopamine not included). Kind of funny to think about that in the context of agency: the agent in (machine) reinforcement learning (the program) has no agency where the rewards are concerned.

5. Shaping. This continues the exploration of reinforcement learning. Shaping is a technique for getting the desired behavior using rewards, but specifically by rewarding approximations of the behavior. It originated with B. F. Skinner in a 1940s project involving pigeons. The animal (or machine learning system) is guided toward more and more accuracy via rewards for actions that get closer and closer to the exact behavior. It starts with trial and error, or flailing around and trying everything. The difficulty is when no reward comes, or rewards come too rarely (sparsity) — for example, only one button on a wall of 1,000 identical buttons is the right one to push. So first you give a reward for just pushing any button. Later you give rewards only for pushing buttons near the one correct button. Finally the only reward given is when that one special button is pushed. Thus the learner learns to push only that button, every time.
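The button example translates almost directly into a staged reward function. This is only a sketch of the idea; the function name, the three stages, and the `radius` of 50 buttons are hypothetical choices of mine, not details from the book.

```python
def shaped_reward(pressed, target, stage, radius=50):
    """Reward for pressing button number `pressed` at a given shaping stage.

    stage 0: any button press earns the reward.
    stage 1: only presses within `radius` buttons of the target earn it.
    stage 2 (or later): only the target button earns it.
    """
    if stage == 0:
        return 1.0
    if stage == 1:
        return 1.0 if abs(pressed - target) <= radius else 0.0
    return 1.0 if pressed == target else 0.0


# The same press can be worth a reward early on and nothing later.
early = shaped_reward(pressed=10, target=700, stage=0)
middle = shaped_reward(pressed=680, target=700, stage=1)
late = shaped_reward(pressed=680, target=700, stage=2)
```

The trainer's real work is deciding when to move from one stage to the next, which this sketch leaves out.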

Christian gives the example of how video games subtly teach us how to play them, which I love, because I am continually impressed at how good some games are at training us through play, without instructions. There’s also the principle of training first with easier versions of the task — learn to catch a big, lightweight ball before trying to catch a baseball for the first time; learn to hit a slow pitch before you try a fast pitch. Christian calls this curriculum. He also refers to animal-training techniques developed by Marian Breland Bailey and her first husband, Keller Breland (their story is told in an open-access article from 2005). Determining the intermediate steps (what are the best early tasks?) is not trivial. Video games pose challenges on each level that are achievable but also, often, at the outer limit of what we’re able to do at that point in the game.

“What makes games so hypercompelling is how well shaped they are. The levels are a perfect curriculum.”

—Brian Christian (p. 175)

Apart from curriculum, you might only use the full task or problem, but build in lots of rewards at the start, like the button-pushing example above. This is the incentives technique. Gradually the incentives are changed to be more centered on the actual goal. Poor outcomes can result when the subject finds ways to get the reward without progressing toward the ultimate goal. “Rewarding A while hoping for B” can backfire (p. 164). One principle is to give the reward for the state of the game, or environment, rather than for the action performed. So pushing the same button repeatedly gets no additional rewards, or kicking a ball such that it lands farther from the goal is punished with a point deduction.
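One way to reward the state rather than the action is to pay out the change in how good the situation is, an idea often called potential-based shaping. The sketch below is an assumption-laden toy: the ball positions, the goal at position 10, and the `closeness` function are invented for illustration.

```python
GOAL = 10  # hypothetical position of the goal on a line


def closeness(ball_position):
    """Potential of a state: higher (less negative) when the ball is nearer the goal."""
    return -abs(GOAL - ball_position)


def shaping_bonus(potential, state, next_state, gamma=1.0):
    """Bonus for a move: the change in potential, not a fee for the action itself."""
    return gamma * potential(next_state) - potential(state)


# The ball moves toward the goal, is kicked backward, then returns.
path = [3, 5, 4, 5]
bonuses = [shaping_bonus(closeness, s, s_next) for s, s_next in zip(path, path[1:])]
```

The bonuses here come out as 2, -1, and 1. The backward kick is punished, and the loop from 5 to 4 and back to 5 nets exactly zero, so there is nothing to gain by repeating the same action over and over.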

At the end of this chapter, Christian discusses evolution (where the “reward” is survival of the species), the “optimal reward problem” (the reward desired by the designer might not be the same as the reward assigned to the agent), and incentivization in real life, or gamification.

6. Curiosity. Origin story of the Arcade Learning Environment, which encoded hundreds of old Atari games into a single package that any researcher could use for training an AI system: This was a milestone because previously researchers had created their own games for training purposes, and there was no consistency. ALE, like the ImageNet dataset, allowed for comparisons among systems that had used the same dataset to learn. DeepMind put a convolutional neural net and Q-learning to the task, with excellent results on many of the Atari games (notably Breakout). A key accomplishment from using a ConvNet (or CNN) was that the neural net determined which features were important in each game (article, 2015). The game on which the DeepMind system was least successful, Montezuma’s Revenge, was the type where the player has to explore a large environment and solve puzzles to enter new rooms. The rewards are sparse, and the player dies often.

To solve a game like Montezuma’s Revenge, a player needs to be curious and intrinsically motivated. You aren’t just shooting things and racking up points. Being motivated by curiosity — a desire to find out what comes next, or how something works — is much harder to simulate in a machine than the desire to get a more tangible reward. What sparks curiosity? New situations (novelty) and surprise (the unexpected), among other things.

A system that performed much better on Montezuma’s Revenge was one with an added “density model” that contained all previously encountered views of the game environment. The model yields a prediction of how unfamiliar — or novel — the current view is (compared with all the past views); the agent is rewarded for finding novel views, thus incentivizing getting out of the same-old, same-old and into a new room or level in the game (p. 192).
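A bare-bones version of a novelty reward can be built from simple visit counts. This sketch stands in for the density model rather than reproducing it: it assumes views can be compared by exact match (room names here), whereas a real density model would have to generalize across similar-looking screens. The class name and the one-over-square-root formula are my own choices for illustration.

```python
import math
from collections import Counter


class NoveltyBonus:
    """Pays a bonus that shrinks each time the same view is seen again."""

    def __init__(self, scale=1.0):
        self.visits = Counter()
        self.scale = scale

    def reward(self, view):
        # Count this visit, then pay less the more familiar the view is.
        self.visits[view] += 1
        return self.scale / math.sqrt(self.visits[view])


bonus = NoveltyBonus()
rewards = [bonus.reward(view) for view in ["room1", "room1", "room1", "room2"]]
```

Staying in the first room pays less and less, and stepping into a new room pays the full bonus again, which is the push toward exploration described above.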

Surprise can mean you encountered an unexpected result, not just a new location. It’s tricky with reinforcement learning because you want the agent to learn that a particular action is “good” (and gets a reward) so that the action will be repeated. But you also want the agent to discover new actions, or a new context for an action — so you also build in rewards for these discoveries. Using this rationale, a team from OpenAI developed the random network distillation (RND) bonus, which resulted in a system that actually completed Montezuma’s Revenge (paper, 2018).

It turns out that giving points for intrinsic rewards can yield better results (at least in video games) than points for the usual (extrinsic) stuff, like shooting bad guys and collecting gold nuggets.

In a segment about boredom and addiction, we learn that intrinsically motivated agents sometimes just give up when they are stuck, like humans. We also find out that novelty and surprise elicit dopamine release — of course, since they seem to promise something interesting coming up.

Normativity: Learning the norms

The final section, Normativity, held the most new material for me.

7. Imitation. Humans are great imitators, almost from birth. If we learn by imitating, why shouldn’t machines? The first hurdle to consider is over-imitation, which is including unnecessary actions or steps that were in the exemplar; human children recognize these as unnecessary but might attribute intentionality to them. Advantages of learning by imitation include efficiency, possible greater safety, and learning things that are hard to describe in words — showing instead of telling. (If you can’t describe all the steps, how could you program them in code?) Examples of early self-driving vehicles are discussed. A big challenge is learning how to recover from mistakes if the exemplar never made any mistakes. Another is new situations that were never demonstrated. A third is “cascading errors,” which can arise from the previous two challenges. A solution is to put the exemplar, or teacher, back in the loop. Step in, take the wheel when things start to go wrong, and the machine system learns what to do in those situations too.

There’s a lot to be considered in what is demonstrated, what is shown or enacted that we want the machine to imitate — the core of the alignment problem. A human performing a task such as driving a car might be considering possible outcomes of current actions, and act accordingly, but those considerations are opaque to any observer, including an AI system learning by imitation. Developers can choose to emphasize, or encode, either the expected reward(s) from an action or a value based on all possible rewards, whether rare or common. (Do we want the machine to assume people usually do not step off the curb into the path of a moving car?)

Then we come to self-play, which was part of Arthur Samuel’s checkers-playing program and later a key to the success of AlphaGo Zero. If the system (encoded with the rules of the game, forbidden moves, etc.) plays itself, not only can it improve more rapidly than by playing human opponents; it can also exceed the skill levels of its own programmers. Limited to imitation, the system might never progress beyond what it has been shown. Christian describes the functions of AlphaGo Zero’s “policy network” during self-play, adding that this machine learning process is called amplification.

Imitation and learning values/policy in the wild seem like the way to go when the task is too complex to explain in detail, to code out completely. Life is not a board game, however. The rules themselves are too complex, based in morality and ethics — human values.

“In the moral domain … it is less clear how to extend imitation, because no such external metric exists.”

—Brian Christian (p. 247)

8. Inference. Humans (even very young humans) can figure out that someone needs help. We can infer others’ goals. We can work together, collaborate, without having every step spelled out for us. Christian says researchers are looking at inference as a way to instill human values in machine systems, using inverse reinforcement learning (IRL). The system needs to infer the reward, from observing the demonstrated behavior, instead of learning the behavior because of getting a reward. Christian calls it “one of the seminal and critical projects in twenty-first century AI” (p. 255).

The system doesn’t need to name the reward (the goal), but it’s got to learn how to reach that goal without ever being told what the goal is, without receiving a designated reward. Examples concern Andrew Ng’s work with autonomous helicopters (large, expensive ones, but not large enough to carry a human) around 2008 (details and video), in which a system learned to perform a difficult trick move, the chaos: a pirouetting flip in which the axis changes throughout, such that the helicopter makes a sphere in the air. Very few human experts who fly these model choppers can successfully complete the maneuver. The key idea here is that the system could infer what the human operator wanted (the goal) even though there were repeated failures and few successes. Note, the system also learned to complete more basic maneuvers that had not been achieved by earlier ML systems. A good explanation from 2018: Learning from humans: what is inverse reinforcement learning?

Another example is kinesthetic teaching, in which a system infers the goal from observing the movements of a robot arm that is controlled by a human. This kind of observing doesn’t mean watching, with machine vision, but rather experiencing the movements. I was reminded of this video (start at 2:32), in which a small robot arm constructs a model of itself by “flailing” — trying out all the possible ways it can move itself. (Although that’s not the same as having a human move the arm through the prescribed motions, it is a way to enable the robot to learn its own capabilities.)

Without an expert on hand to perform the tasks we want the robot to learn, we might train the system using feedback from an observer. Christian describes a groundbreaking AI safety project from 2017 in which the system would send random video clips to its human “evaluators,” and one of two video clips would be tagged as better than the other — like saying, “Here, you’re on the right track.” Again, a key aspect of this reinforcement training is that no score exists. Unlike playing a video game, the system cannot rack up points. I think it’s important to mention that the “robots” are performing within a simulation, with gravity and so on in force, so the video clips are recordings of the simulated robot in the simulated environment. Using this method, a robot was successfully trained to perform a backflip (paper).
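Even without a score, pairwise judgments can be turned into a number the system can optimize. One common way is to fit a hidden reward for each clip so that preferred clips get higher values, with the chance of preferring A over B modeled as a logistic function of the reward difference (the Bradley-Terry form). The sketch below is a toy under my own assumptions: three clips, a handful of invented comparisons, and one reward number per clip rather than a neural network.

```python
import math


def preference_probability(reward_a, reward_b):
    """Modeled chance that clip A is preferred over clip B."""
    return 1.0 / (1.0 + math.exp(reward_b - reward_a))


def fit_rewards(n_clips, comparisons, steps=500, learning_rate=0.1):
    """Fit one reward per clip from (winner, loser) pairs by gradient ascent."""
    rewards = [0.0] * n_clips
    for _ in range(steps):
        gradients = [0.0] * n_clips
        for winner, loser in comparisons:
            p_winner = preference_probability(rewards[winner], rewards[loser])
            # Gradient of log p_winner: raise the winner, lower the loser.
            gradients[winner] += 1.0 - p_winner
            gradients[loser] -= 1.0 - p_winner
        rewards = [r + learning_rate * g for r, g in zip(rewards, gradients)]
    return rewards


# Hypothetical evaluator judgments: clip 2 always wins; clips 0 and 1 split.
comparisons = [(2, 0), (2, 1), (1, 0), (2, 0), (0, 1)]
rewards = fit_rewards(3, comparisons)
```

The fitted rewards rank clip 2 above the other two, and a reinforcement learner can then be trained against that learned reward instead of a game score.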

This is exciting — if an AI system can learn “best behavior” by flailing and receiving feedback from human observers, maybe it’s possible to train for different kinds of tasks for which it would be impossible to write out explicit rules.

Cooperative inverse reinforcement learning (CIRL) takes into account the machine working with the human. This is almost super-alignment, because it’s not about getting the AI system to have your goal for itself but rather to achieve the goal you want for yourself. Christian’s effective example is a person reaching for a thing that is out of reach (me, with the high shelves in the supermarket): a robot doesn’t need to want the thing you’re reaching for. It should recognize its goal as getting for you what you can’t get for yourself. To achieve this, we’re going to need to deliberately teach the systems. “The insights of pedagogy and parenting are being quickly taken up by computer scientists,” Christian says (p. 270). This kind of learning also requires more interaction, more back-and-forth between the humans and the machine.

Christian raises a concern regarding these paired systems, our possible robot or software helpmates of the future: Not everything we want is good for us — and not everything a corporation wants us to do is good for us. Alignment with our desires might not be in alignment with our best interests.

9. Uncertainty. This chapter begins with the frightening story of a near-disaster in 1983, when a Soviet lieutenant colonel made a very human judgment call and likely saved the world from nuclear annihilation. The point is that computer systems (such as … nuclear-warning systems) are not perfect, and human intuition has more than once averted catastrophe.

There’s a relationship between adversarial attacks on image-recognition systems and the ability of researchers to create digital images that (to humans) show only a jumble of random pixels but that an AI system “recognizes” with 99 percent certainty as an ostrich, or a stop sign. Both types of error happen because the ML training process produces an ability to recognize patterns of pixels. The “open category problem” refers to the training process for these systems: They are trained to “recognize” some number of things in digital images — say 1,000 things, or 10,000 — but there are millions of things in the world. An image-recognition system is going to give you its best guess, but it only “knows” the things it was trained to know.

Getting the system to admit it does not know what a thing is — this is a kind of frontier in today’s research. If your system is recognizing pre-cancerous moles and you give it a photo of a pizza, you want it to say, “That’s probably not a mole at all.”

Bayesian neural networks were explored and sort of abandoned in the 1980s and ’90s because they couldn’t scale. Instead of a fixed weight on a connection for each unit in the neural net (as in a non–Bayesian NN), there would be a range of values for each weight. Because of the range, you might get a different output for the same input after training was completed. If most outputs matched, confidence would be high. Varied outputs (disagreement) would be akin to the system saying, “I don’t know what that is.” Note, researchers can approximate Bayesian NNs by training several conventional models separately. They run the same input through each model and compare the outputs. A lack of matching outputs: “We don’t know what that is.” A group of models like this is an ensemble. But you don’t really need separately trained models (if I’m understanding this segment correctly); all you need to do is randomly disable some of the units in the trained NN, get your output, and then disable a different random set of units and run the data again. This technique, known as dropout, goes all the way back to AlexNet in 2012 (p. 285). Apparently it’s just as effective for reporting uncertainty as a bona fide Bayesian NN. Christian calls it a dropout-based uncertainty measure, and it’s been effective for recognizing unhealthy human retinas and for regulating the speed of autonomous vehicles (in case of uncertainty, slow down).
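The disagreement check itself is simple once you have several outputs for the same input, whether they come from separately trained models or from repeated dropout passes. This is a minimal sketch with invented names and an arbitrary 80 percent agreement threshold; it covers only the voting step, not the models that produce the labels.

```python
from collections import Counter


def ensemble_verdict(predictions, min_agreement=0.8):
    """Return (label, agreement), or ("unknown", agreement) if the votes are split.

    predictions: one predicted label per model or per dropout pass.
    min_agreement: hypothetical cutoff for trusting the majority label.
    """
    label, votes = Counter(predictions).most_common(1)[0]
    agreement = votes / len(predictions)
    if agreement >= min_agreement:
        return label, agreement
    return "unknown", agreement


# Nine of ten passes agree, so the label is trusted.
confident = ensemble_verdict(["mole"] * 9 + ["benign"])
# The passes scatter across labels, so the system declines to answer.
unsure = ensemble_verdict(["mole", "pizza", "cat", "mole", "dog"])
```

In a real system the "unknown" verdict is the useful part: it is the cue to slow the car down or hand the image to a human.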

When uncertainty is present, we want systems to be cautious. Some decisions are more weighty than others; Christian discusses the interpretation of a “do not resuscitate” order. (If in doubt, resuscitate.) He characterizes the challenge as “measuring impact,” and again we’re looking at a very human kind of judgment call, based on human experiences, ethics, and so on. What would be the impact of a bad call? This segment made me think of the Prime Directive in Star Trek. (Starfleet personnel are forbidden to interfere with the natural development of alien civilizations. It’s already interference if you’ve landed on their planet!) There’s also the question of whether the result is reversible (irreversible == higher impact, but some irreversible actions are trivial, e.g. the apple is gone after you’ve eaten it). Keeping options open can be important — and how do we train the AI system to see the options and choose among them? I loved the references to Sokoban, a game I’ve played on many different platforms — but like so many other toy examples, it’s ridiculously simple compared to the real world. See AI Safety Gridworlds (2017).

Then we come to intervention. If anything goes wrong with an artificial intelligence system (running amok!), we must be able to intervene, right? (See nuclear near-disaster, above.) This is called corrigibility. But pulling the plug is not the answer. That’s why this is in the Uncertainty chapter — ideally, the system would shut itself down if necessary, and if “necessary” is in question, alert the humans. This goes into an almost chicken-and-egg situation: Will the machine let the human intervene? What if the human should not be permitted to intervene? What if the machine’s uncertainty is too low? Too high? What if the humans’ end goals are not entirely clear? (Protect the world at all costs, or protect the Soviet Union and to hell with everyone else?)

Now the difficulty in designing explicit reward functions becomes life-or-death. If the goal is to protect the Soviet Union (and there’s no human intervention), we’re all dead. AI researchers are trying, essentially, to model the intuition of the human lieutenant colonel who reasoned that it was highly unlikely that the U.S. had fired five nuclear missiles at his country at that time. Uncertainty and the imperfection of the world and the infinite number of possible situations that might arise. Researchers working on inverse reward design (IRD) are allowing the system to second-guess the goals.

The final segment of the chapter, “Moral Uncertainty,” looks at the question, “What is the right thing to do when you don’t know the right thing to do?” Given the example of sin in religious belief systems, sometimes the rule is crystal clear, and sometimes it’s not. Turns out there’s a book (open access). Christian has gone off the rails into philosophy here, although it’s certainly interesting. I liked the final two pages where the philosopher Nick Bostrom came up, offering reasons why this seemingly esoteric stuff is not mere navel-gazing but actually important.

In conclusion

The book’s Conclusion is unusual in that it is a kind of extension of several of the individual chapters — not a summary so much as “Here’s what to look out for, in the future.” My takeaways are that more and more researchers are focusing on AI ethics and safety, which must be a good thing; the world is continuously changing, so AI models will always need updating; this book was published in 2020, and how can I keep up with what has happened in this field since then?

I think it’s tremendously important for more people to have more understanding of what’s going on with AI development — not just products and threats and dangers, but what questions the researchers are asking and how they are trying to find answers.


AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.
