I found an old bookmark today and it led me to The Bitter Lesson, a 2019 essay by Rich Sutton, a computer science professor and research scientist based in Alberta, Canada. Apparently OpenAI engineers were instructed to memorize the article.

tl;dr: “Leveraging human knowledge” has not proven effective at significantly advancing artificial intelligence systems. Instead, leveraging computation (computational power, speed, operations per second, parallelization) is what ultimately works, and by a large margin. “These two need not run counter to each other, but in practice they tend to. Time spent on one is time not spent on the other.” Thus all efforts to encode how we think and what we know are just wasting precious time, damn it! This is the bitter lesson.

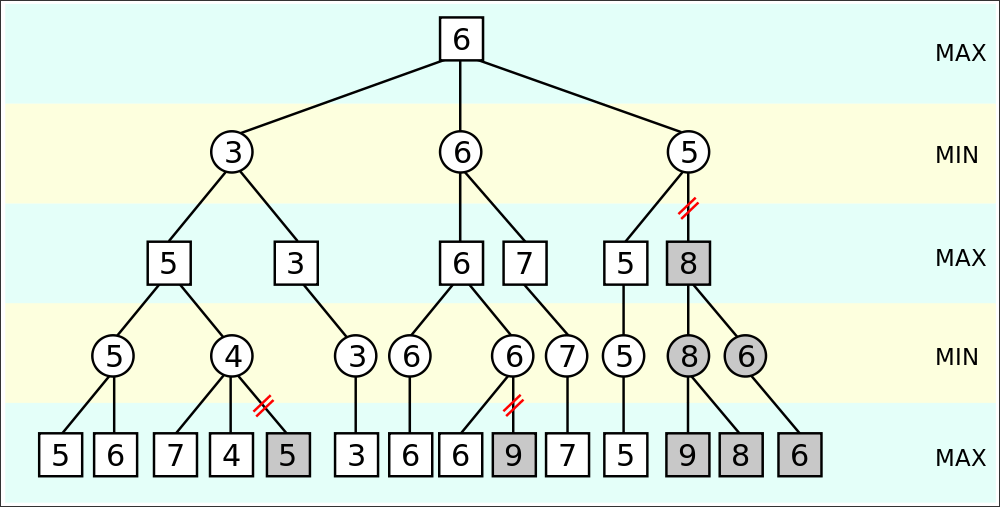

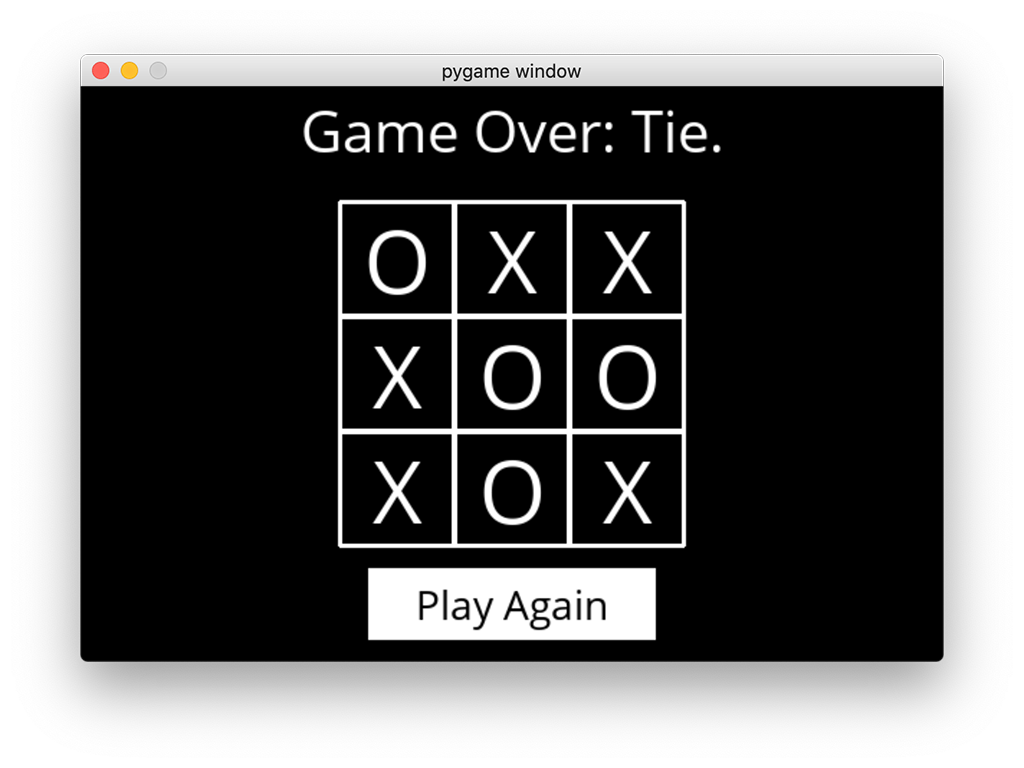

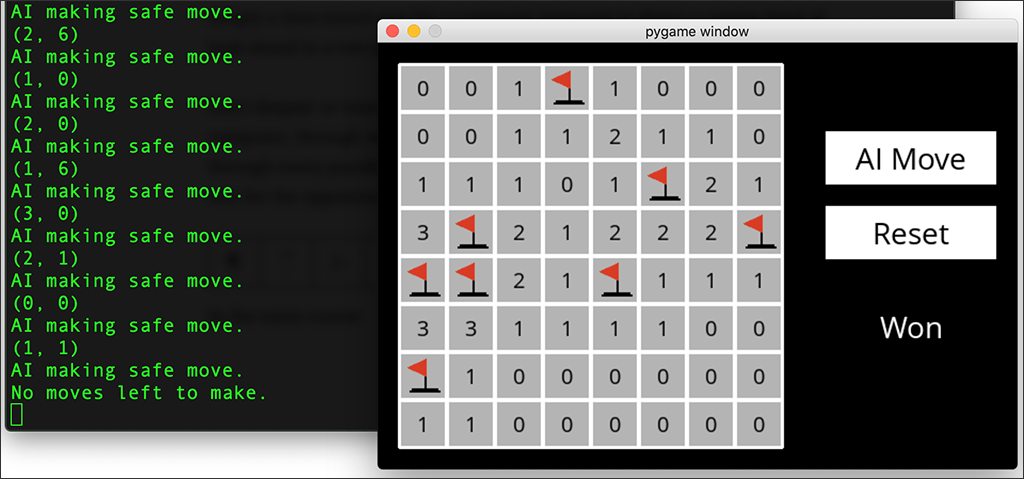

Sutton has worked extensively on reinforcement learning, so it’s not surprising that he mentions examples of AI systems playing games. Systems that “learn” by self-play (that is, one copy of the program playing another copy of the same program) leverage computational power, not human knowledge. DeepMind’s AlphaZero demonstrated that self-play can enable a single system to learn not just one game but several: chess, shogi, and Go (although each trained copy only “knows” one game).
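
To make the idea of self-play a bit more concrete, here is a minimal sketch in Python. It is a toy, not how AlphaZero works (AlphaZero combines neural networks with tree search). The game is my own choice for illustration: a pile of stones, each player removes 1 to 3 per turn, and whoever takes the last stone wins. The function names, the table `q`, and the settings `epsilon` and `alpha` are all assumptions made up for this example. The point is only that both “players” are the same program reading and updating the same table, and no human strategy is written in anywhere.

```python
import random

MAX_TAKE = 3  # each turn a player removes 1..3 stones; taking the last stone wins


def legal_moves(pile):
    return list(range(1, min(MAX_TAKE, pile) + 1))


def choose_move(q, pile, rng, epsilon):
    """Mostly pick the move the table currently rates best; sometimes explore."""
    moves = legal_moves(pile)
    if rng.random() < epsilon:
        return rng.choice(moves)
    return max(moves, key=lambda m: q.get((pile, m), 0.0))


def self_play_episode(q, rng, start_pile=10, epsilon=0.2, alpha=0.1):
    """Play one game in which both sides use (and update) the same table."""
    history = []  # (pile, move) pairs, alternating between the two players
    pile = start_pile
    while pile > 0:
        move = choose_move(q, pile, rng, epsilon)
        history.append((pile, move))
        pile -= move
    # Whoever made the last move won. Walk backwards through the game,
    # flipping the outcome at each step because the players alternate.
    outcome = 1.0
    for state_action in reversed(history):
        old = q.get(state_action, 0.0)
        q[state_action] = old + alpha * (outcome - old)
        outcome = -outcome
    return history


def train(episodes=5000, seed=0):
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        self_play_episode(q, rng)
    return q


if __name__ == "__main__":
    q = train()
    for pile in range(1, 11):
        best = max(legal_moves(pile), key=lambda m: q.get((pile, m), 0.0))
        print(pile, "->", best)
```

Running the script prints the move the table currently prefers for each pile size. More episodes means more computation and, typically, a better table, which is the trade Sutton is describing.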

“Search and learning are the two most important classes of techniques for utilizing massive amounts of computation in AI research.”

—Richard S. Sutton

Games, of course, are not the only domain in which we can see the advantages of computational power. Sutton notes that breakthroughs in speech recognition and image recognition came from applying statistical methods to huge training datasets.

Trying to make systems “that worked the way the researchers thought their own minds worked” was a waste of time, Sutton wrote. Even so, I think today’s systems still use layers of units (sometimes called “artificial neurons”) that connect to multiple units in other layers, and that architecture was inspired by what we do know about how brains work. Modifications to the connections between units (governed by algorithms, not adjusted by humans) constitute the “learning” that has proved to be so successful.
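
Here is a rough sketch of what “modifications to the connections” can look like, shrunk down to a single unit with two incoming connections. Real systems stack many layers of such units and train them with backpropagation; this example is my own simplification, and the task (logical OR), the function names, and the settings `steps` and `learning_rate` are assumptions chosen only for illustration. The update rule is plain gradient descent on a logistic unit with cross-entropy loss.

```python
import math

# Inputs and targets for logical OR, chosen only as a tiny illustrative task.
DATA = [
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 1.0),
    ((1.0, 0.0), 1.0),
    ((1.0, 1.0), 1.0),
]


def unit_output(weights, bias, inputs):
    """One artificial 'neuron': a weighted sum of inputs squashed into (0, 1)."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-total))


def train_unit(steps=2000, learning_rate=1.0):
    weights, bias = [0.0, 0.0], 0.0  # connection strengths start at zero
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for inputs, target in DATA:
            # How far off the unit is on this example.
            error = unit_output(weights, bias, inputs) - target
            for i, x in enumerate(inputs):
                grad_w[i] += error * x / len(DATA)
            grad_b += error / len(DATA)
        # The "learning": an algorithm, not a person, nudges each connection.
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


if __name__ == "__main__":
    weights, bias = train_unit()
    for inputs, target in DATA:
        print(inputs, target, round(unit_output(weights, bias, inputs), 3))
```

Nobody tells the unit what OR means. It starts with all connection strengths at zero, and the repeated small adjustments are what move its outputs toward the targets.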

“[B]reakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning.”

—Richard S. Sutton

I don’t think this lesson is actually bitter. What Sutton is saying is that human brains (and human minds, and human thinking, and human creativity) are really, really complex, so we can’t figure out how to make the same things happen in, or with, a machine. We can produce better and more useful outputs thanks to improved computational methods, but we can’t make the machines better by sharing with them what we know, or by trying to teach them how we may think.

AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.