Many academic papers about artificial intelligence are focused on a narrow domain or one specific application. In trying to get a grip on the uses of AI in the field of journalism, we often find that one paper bears no similarity to the next, which makes it hard to talk about AI in journalism comprehensively or in a general sense. We also find that large sections of some papers in this area are more speculative than practical, discussing what could exist more than what exists today.

In this post I will summarize two papers that are focused on uses of AI in journalism that do actually exist. These two papers also do a good job of putting into context the disparate applications relevant to journalism work and journalism products.

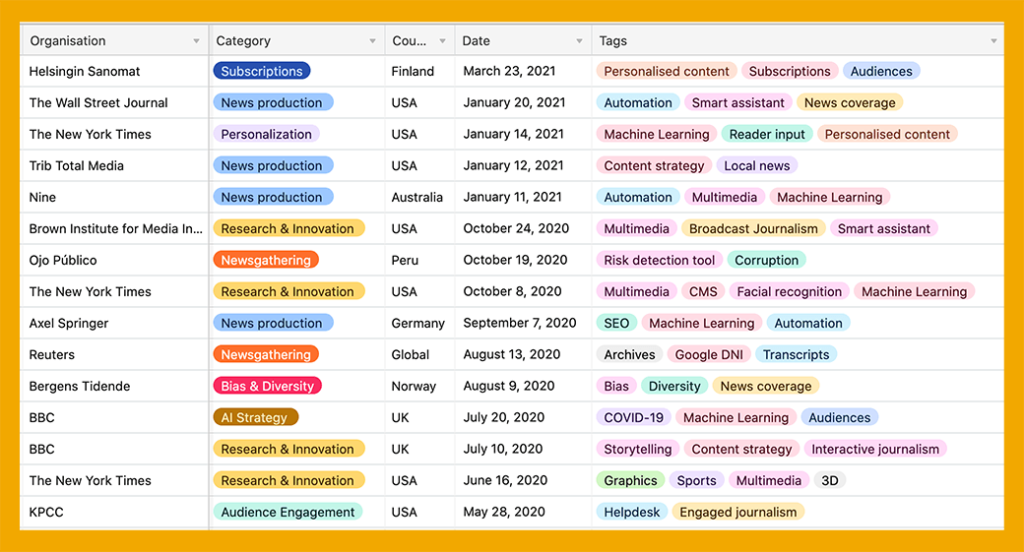

In the first paper, Artificial Intelligence in News Media: Current Perceptions and Future Outlook (2022; open access), the authors examined 102 case studies from a dataset compiled at JournalismAI, an international initiative based at the London School of Economics. They classified the projects according to seven “major areas” or subfields of AI:

- Machine learning

- Natural language processing (NLP)

- Speech recognition

- Expert systems

- Planning, scheduling, and optimization

- Robotics

- Computer vision

I could quibble with the categories, especially as systems in five of them (NLP, speech recognition, planning/scheduling/optimization, robotics, and computer vision) often rely on machine learning. The authors did acknowledge that planning, scheduling, and optimization “is commonly applied in conjunction with machine learning.” They also admitted that some of the projects incorporated more than one subfield of AI.

According to the authors, three subfields were missing altogether from the journalism projects in their dataset: expert systems, speech recognition, and robotics.

Use of machine learning was common in projects related to increasing users’ engagement with news apps or websites, and in efforts to retain subscribers. These projects included recommendation engines and flexible paywalls “that bend to the individual reader or predict subscription cancellation.”

Uses of computer vision were quite varied. Several projects used it with satellite imagery to detect changes over time. The New York Times used computer vision algorithms for the 2020 Summer Olympics to analyze and compare movements of athletes in events such as gymnastics. Reuters used image recognition to enhance in-house searches of the company’s vast video archive (speech-to-text transcripts for video were also part of this project). More than one news organization is using computer vision to detect fake images.

Interestingly, automated stories were categorized as planning, scheduling, and optimization rather than as NLP. It’s true that the day-to-day automation of various reports on financial statements, sporting events, real estate sales, etc., across a range of news organizations is handled with story templates — but the language in each story is adjusted algorithmically, and those algorithms have come at least in part from NLP.
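To make the template idea concrete, here is a minimal sketch of how a story template might be filled from structured data, with the wording adjusted by simple rules. The company name, figures, thresholds, and function names are all hypothetical, and this is not how any particular news organization's system works.

```python
def describe_change(pct: float) -> str:
    """Pick a verb phrase from the size and direction of the change.

    The 10 percent cutoffs are arbitrary, chosen only for illustration.
    """
    if pct >= 10:
        return "surged"
    if pct > 0:
        return "rose"
    if pct == 0:
        return "was unchanged"
    if pct > -10:
        return "fell"
    return "plunged"


def earnings_story(company: str, quarter: str, revenue: float, prior_revenue: float) -> str:
    """Fill a fixed sentence template from structured earnings data (in millions)."""
    pct = (revenue - prior_revenue) / prior_revenue * 100
    verb = describe_change(pct)
    if pct == 0:
        return f"{company} said {quarter} revenue {verb} at ${revenue:,.0f} million."
    return (
        f"{company} said {quarter} revenue {verb} {abs(pct):.1f} percent "
        f"to ${revenue:,.0f} million, from ${prior_revenue:,.0f} million a year earlier."
    )


# Hypothetical input row, as it might arrive from a financial data feed.
print(earnings_story("Example Corp.", "third-quarter", 120.0, 100.0))
```

The template stays fixed while the verb and the numbers change with the data, which is roughly the division of labor described above.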

The authors noted that within their limited sample, few projects involved social bots. “Most of the bots that we researched were news bots that write stories,” they said. It is true that “social bots such as Twitter bots do not necessarily use AI” — but in that case, the bot is going to use a rule-based system or de facto expert system, a category of AI the authors said was missing from the dataset.

Most of the projects in the dataset relied on external funding, and mainly from one source: Google’s Digital News Innovation Fund grants.

One thing I like about this research is that it does not conflate artificial intelligence and data journalism — which in my view is a serious flaw in much of the literature about AI in journalism. You might notice that in the foregoing summary, the only instances of AI contributing information to stories involved use of satellite imagery.

The authors of the article discussed above are Mathias-Felipe de-Lima-Santos of the University of Navarra, Spain, and Wilson Ceron of the Federal University of São Paulo, Brazil.

What about using AI as part of data journalism?

In an article published in 2019, Making Artificial Intelligence Work for Investigative Journalism, Jonathan Stray (now a visiting scholar at the UC Berkeley Center for Human-Compatible AI) authoritatively debunked the myth that data journalists are routinely using AI (or soon will be), and he explained why. Two very simple reasons bear mention at the outset:

- Most journalism investigations are unique. That makes it hard to justify the time, expense and expertise required to develop an AI solution or tool to aid in one investigation, because it likely would not be usable in any other investigation.

- Journalists’ salaries are far lower than the salaries of AI developers and data scientists. A news organization won’t hire AI experts to develop systems to aid in journalism investigations.

Data journalists do use a number of digital tools for cleaning, analyzing, and visualizing data, but it must be said that almost none of these tools are part of what is called artificial intelligence. Spreadsheets, for example, are essential in data journalism but a far cry from AI. Stray points to other tools, such as those for extracting information from digitized documents, or finding and eliminating duplicate records in datasets (e.g. with Dedupe.io). The line gets fuzzy when the journalist needs to train the tool so that it learns the particulars of the given dataset; by definition, that is machine learning. This training of an already-built tool, however, is immensely simpler than the thousands or even millions of training epochs overseen by computer scientists who develop new AI systems.
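For a sense of what finding duplicate records involves, here is a minimal sketch using only Python's standard library. It is a rule-based stand-in with a fixed similarity threshold, not the trained approach that a tool like Dedupe.io uses, and the donor names and the 0.85 threshold are assumptions made up for illustration.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and collapse runs of whitespace."""
    kept = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def likely_duplicates(records: list[str], threshold: float = 0.85) -> list[tuple[str, str, float]]:
    """Compare every pair of records and keep pairs at or above the threshold."""
    pairs = []
    for i, a in enumerate(records):
        for b in records[i + 1:]:
            score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
            if score >= threshold:
                pairs.append((a, b, round(score, 2)))
    return pairs


# Hypothetical donor names, as they might appear in a campaign finance file.
donors = ["Acme Holdings, LLC", "ACME Holdings LLC", "Acme Holding LLC", "Zenith Partners"]
for a, b, score in likely_duplicates(donors):
    print(f"{score:.2f}  {a!r} ~ {b!r}")
```

A fixed threshold like this is exactly what breaks down on messy real data, which is where training a tool on examples from the dataset at hand comes in. Either way, a human still has to confirm each flagged pair.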

Stray clarifies his focus as “the application of AI theory and methods to problems that are unique to investigative reporting, or at least unsolved elsewhere.” He identifies these categories for successful uses of AI in journalism so far:

- Document classification

- Language analysis

- Breaking news detection

- Lead generation

- Data cleaning

Stray’s journalism examples are cases that have been covered before. He acknowledges that the “same small set of examples is repeatedly discussed at data journalism conferences” and this “suggests that there are a relatively small number of cases in total” (page 1080).

Supervised document classification is a method for sorting a large number of documents into groups. For investigative journalists, this separates documents likely to be useful from others that are far less likely to be useful; human examination of the “likely” group is still needed.
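As a rough illustration of supervised classification, the sketch below trains a tiny naive Bayes classifier on a few hand-labeled documents and then sorts new ones into "review" or "skip". Naive Bayes is my choice for the example, not a method Stray names, and the texts and labels are invented. A real project would use an established library and far more training data.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace, keeping purely alphabetic tokens."""
    return [w for w in text.lower().split() if w.isalpha()]


class NaiveBayes:
    """Multinomial naive Bayes with add-one smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.doc_counts = Counter(labels)        # label -> number of documents
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def score(self, text, label):
        """Log prior plus log likelihood of the known words under this label."""
        counts = self.word_counts[label]
        total = sum(counts.values()) + len(self.vocab)
        logp = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in tokenize(text):
            if word in self.vocab:  # words never seen in training are ignored
                logp += math.log((counts[word] + 1) / total)
        return logp

    def predict(self, text):
        return max(self.doc_counts, key=lambda label: self.score(text, label))


# Hypothetical hand-labeled examples: "review" means worth a reporter's time.
texts = [
    "officer used force during arrest complaint filed",
    "complaint alleges excessive force by officer",
    "request for parking permit renewal",
    "meeting agenda for parking committee",
]
labels = ["review", "review", "skip", "skip"]

model = NaiveBayes().fit(texts, labels)
print(model.predict("new complaint about officer force"))
print(model.predict("parking permit agenda"))
```

The classifier only narrows the pile. Everything it puts in the "review" group still needs to be read by a person.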

By language analysis, Stray means use of natural language processing (NLP) techniques. These include unsupervised methods of sorting documents (or forum comments, social media posts, emails) into groups based on similarity (topic modeling, clustering), or determining sentiment (positive/negative, for/against, toxic/nontoxic), or other criteria. Language models, for example, can identify “named entities” such as people or “nationalities or religious or political groups” (NORP) or companies.
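The unsupervised side can be sketched in a similar way. The example below groups short texts by word overlap using cosine similarity and a single greedy pass. This is a deliberately simple stand-in for real clustering or topic modeling, and the headlines and the 0.5 threshold are assumptions for illustration.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    """Represent a text as a bag of lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def group_by_similarity(texts: list[str], threshold: float = 0.5) -> list[list[int]]:
    """Assign each text to the first group whose first member is similar enough."""
    vectors = [vectorize(t) for t in texts]
    groups: list[list[int]] = []
    for i, vec in enumerate(vectors):
        for group in groups:
            if cosine(vec, vectors[group[0]]) >= threshold:
                group.append(i)
                break
        else:
            groups.append([i])  # no existing group matched, so start a new one
    return groups


# Hypothetical headlines; no labels are supplied in advance.
headlines = [
    "city council approves new budget",
    "council approves budget for city",
    "local team wins championship game",
    "team wins the championship",
]
print(group_by_similarity(headlines))
```

No one tells the program what the groups mean. It only reports which texts resemble one another, and a person has to decide whether the grouping is useful.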

Breaking news detection: The standard example is the Reuters Tracer system, which monitors Twitter and alerts journalists to news events. The advantage is getting a head start of as much as 18 minutes over other news organizations that will cover the same event. I am not sure whether any other organization has ever developed a comparable system.

Lead generation is not exactly story discovery but more like “Here’s something you might want to investigate further.” It might pan out; it might not. Stray’s examples here are a bit weak, in my opinion, but the one for using face recognition to detect members of the U.S. Congress in photos uploaded by the public does set the imagination running.

Data cleaning is always necessary, usually tedious, and often takes more time than any other part of the reporting process. It makes me laugh when I hear data-science educators talk about giving their students nice, clean datasets, because real data in the real world is always dirty, and you cannot analyze it properly until it has been cleaned. Data journalists talk about this incessantly, and about reliable techniques not only for cleaning data but also for documenting every step of the process. Stray does not provide examples of using AI for data cleaning, but he devotes a portion of his article to this and data “wrangling” as areas he deems most suitable for AI solutions in the future.

When documents are extremely diverse in format and/or structure (e.g. because they come from different entities and/or were created for different purposes), it can be very difficult to extract data from them in any useful way (for example: names of people, street addresses, criminal charges) unless humans do it by hand. Stray calls it “a challenging research problem” (page 1090). Another challenge is linking disparate documents to one another, for which the ultimate case to date is the Panama Papers. Network analysis can be used (after named entities are extracted), but linkages will still need to be checked by humans.
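The linking step can be sketched once named entities have been extracted. The example below assumes extraction has already been done (by NLP or by hand) and simply records which pairs of entities appear together in which documents. The document IDs and names are invented, and this is not a description of how the Panama Papers analysis was carried out.

```python
from collections import defaultdict
from itertools import combinations


def build_entity_graph(doc_entities: dict[str, set[str]]) -> dict[tuple[str, str], list[str]]:
    """Map each pair of entities to the documents in which both appear."""
    edges = defaultdict(list)
    for doc_id, entities in doc_entities.items():
        # Sorting gives each pair one canonical order, e.g. (A, B) but never (B, A).
        for a, b in combinations(sorted(entities), 2):
            edges[(a, b)].append(doc_id)
    return dict(edges)


# Hypothetical output of an entity-extraction step, keyed by document ID.
docs = {
    "filing_001": {"Jane Roe", "Harbor Shell Ltd"},
    "filing_002": {"Harbor Shell Ltd", "John Doe"},
    "email_017": {"Jane Roe", "Harbor Shell Ltd", "John Doe"},
}

edges = build_entity_graph(docs)
# List the most frequently co-occurring pairs first.
for pair, sources in sorted(edges.items(), key=lambda kv: -len(kv[1])):
    print(pair, sources)
```

Each edge keeps the list of source documents, so a reporter can go back and check whether a co-occurrence reflects a real relationship.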

Stray also (quite interestingly) wrote about what would be needed if AI systems were to determine newsworthiness — the elusive quality that all journalists swear they can recognize (much like Supreme Court Justice Potter Stewart’s famous claim about obscenity).

Conclusions

From my reading so far, I think there are two major applications of AI in the journalism field actually operating at present: production of automated news stories (within limited frameworks), and purpose-built systems for manipulating the content choices offered to users (recommendations and personalization). Automated stories or “robot journalism” have been around for at least seven or eight years now and have been written about extensively.

I’ve read (elsewhere) about efforts to catalog and mine gigantic archives of both video and photographs, and even to produce fully automated videos with machine-generated voiceover narration, but I think those are corporate strategies to extract value from existing resources rather than something intended to produce new journalism in the public interest. I also think those efforts might be taking place mainly outside the journalism area by now.

One thing that’s clear: The typical needs of an investigative journalism project (the highest-cost and possibly most important kind of journalism) are not easily solved by AI, even today. In spite of great advances in NLP, giant collections of documents must still be acquired piecemeal by humans, and while NLP can help with some parts of extracting valuable information from documents, in the end these stories require a great deal of human labor and time.

Another area not addressed in either of the two articles discussed here is verification and fact-checking. The ClaimReview Project is one approach to this, but it is powered by human fact-checkers, not AI. See also the conference paper The Quest to Automate Fact-Checking, presented at the 2015 Computation + Journalism Symposium.


AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.
