The system described in this wonderful New Yorker article from March 2021 is NOT a neural network, and that’s one of the things that make it fascinating. I’ve written before about ImageNet and how neural networks, trained on humongous datasets of labeled digital images, are able to very accurately say what is in a photograph that the system has never “seen” before.

This is different.

This system, developed by a small company in Japan, does not need hundreds or thousands of images of each object it has to identify, precisely because it doesn’t use a neural network. The technologies it uses can be called good old-fashioned AI (GOFAI). Essentially, it consists of a collection of manually constructed algorithms.

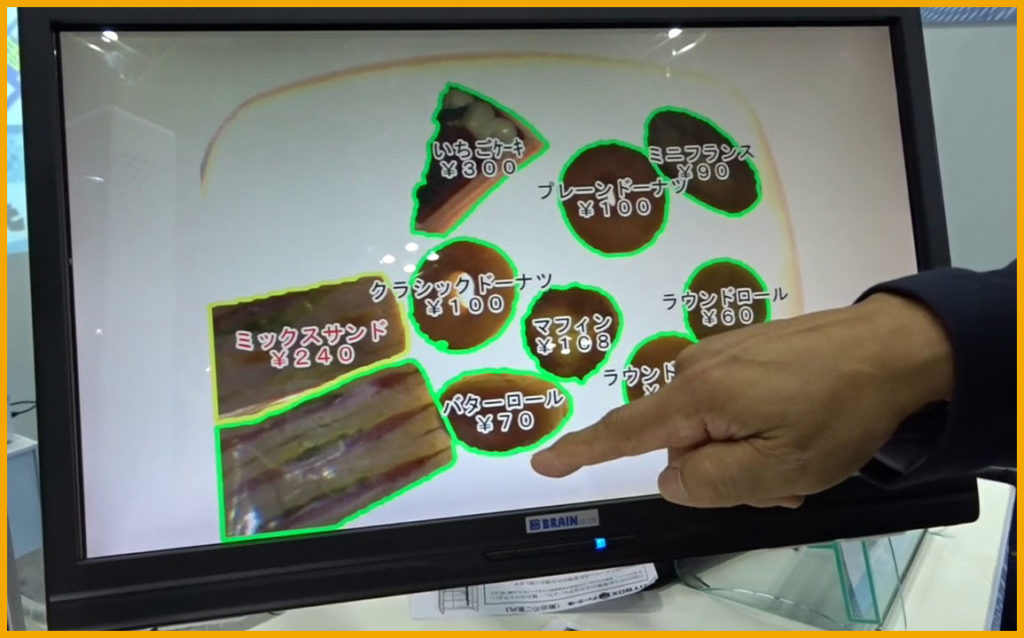

The system, called BakeryScan, also “learns,” but not in the typical black-box sense of today’s machine learning systems. It is widely used in the checkout systems of Japanese bakeries, which offer a bewilderingly large assortment of pastries and small bread items, many of which look quite similar to one another. BakeryScan was released in 2013 after 15 years in development.

More recently, the bakery system has been adapted to recognize specific types of cancer cells. The new system is able to “look at an entire microscope slide and identify the cells that might be cancerous” (source: The New Yorker article).

Rather than summarizing the article further, I’m just going to urge you to read it. It’s very much worth your time.

AI in Media and Society by Mindy McAdams is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Include the author’s name (Mindy McAdams) and a link to the original post in any reuse of this content.
